Everything you need to know about building ChatGPT apps

We’ve been building a wide variety of ChatGPT apps over the past few days since the release of the ChatGPT Apps SDK, and have some hot-off-the-presses intel to share to help fellow builders.

A ChatGPT App is:

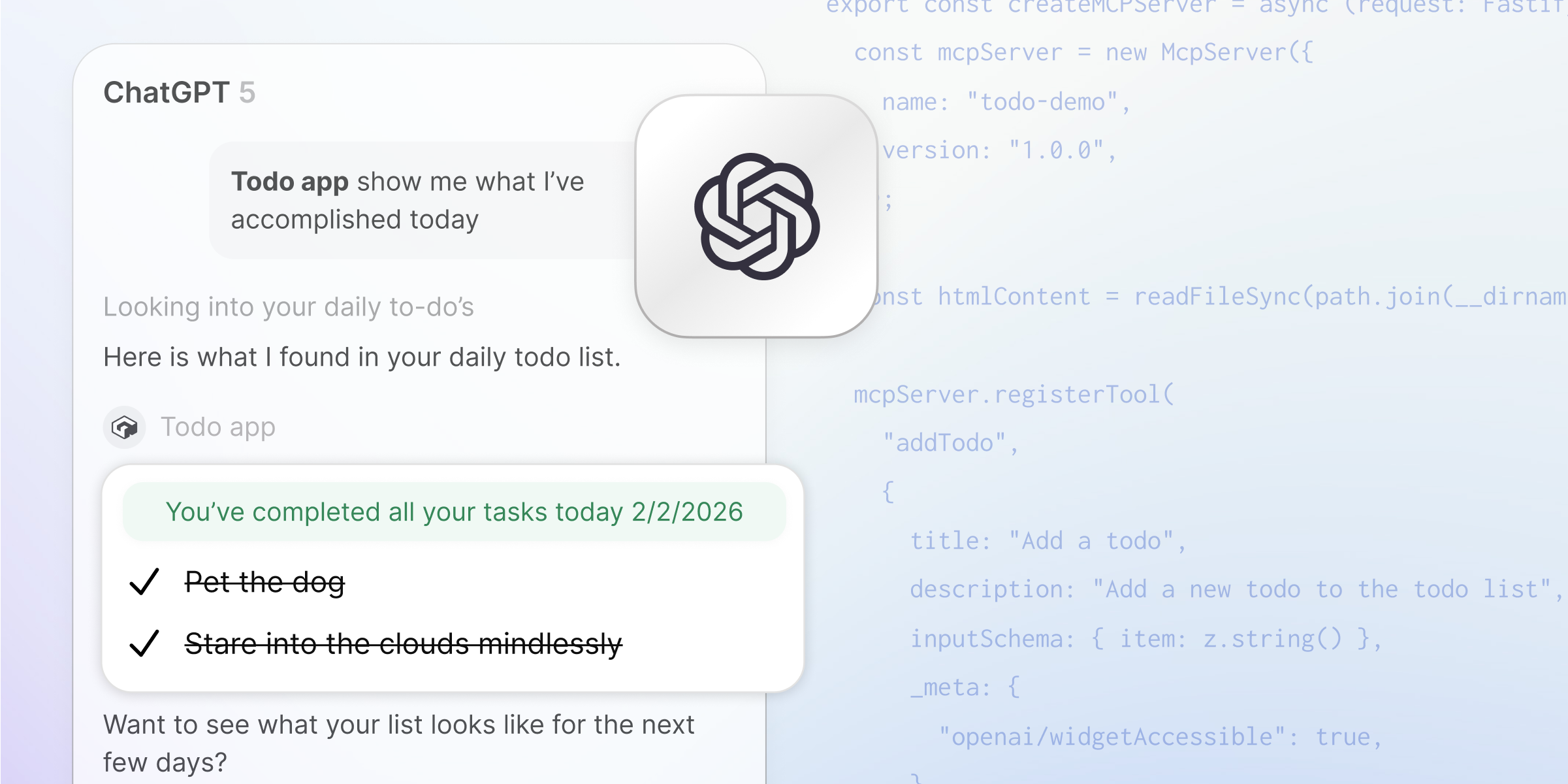

- An MCP server, conforming to the normal Model Context Protocol, not too different from the MCPs everyone has already been building

- An extension to the protocol that allows apps to render UI within a conversation, but only when asked by the user in the conversation

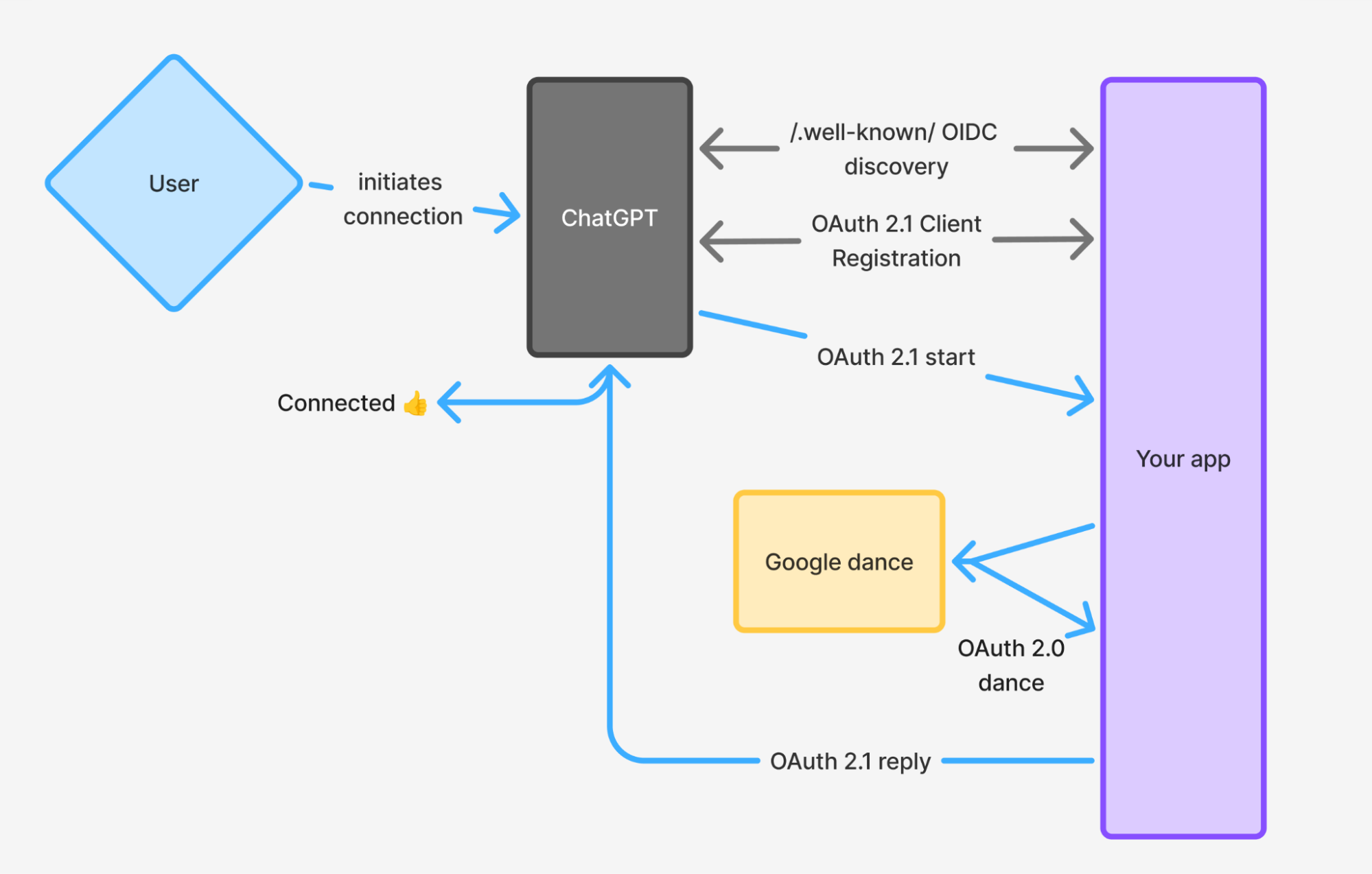

- An optional OAuth 2.1 server and OIDC discovery implementation that allows ChatGPT to authenticate with your backend and prove it is who it says it is

We’re going to go through each of these pieces and share all the nitty gritty details about the ChatGPT ecosystem that we’ve learned so far.

Building MCPs

Building MCP servers has come a long way since the early days. The <inline-code>@modelcontextprotocol/sdk<inline-code> TypeScript package has a lot of what you need to serve your app securely over HTTPS. ChatGPT’s examples repo has a decent project starter that shows how to get up and running.

Curiously though, ChatGPT’s examples use the older SSE version of the protocol instead of the newer Streamable HTTP version, despite ChatGPT itself supporting both. The examples also use a decidedly unscalable and unstable in-memory session map that breaks each time you deploy, and will not work well at all on serverless platforms. After some thorough testing, we can say that ChatGPT supports the Streamable HTTP version of MCP just fine, and that you should just use it! The <inline-code>StreamableHTTPServerTransport<inline-code> class from <inline-code>@modelcontextprotocol/sdk<inline-code> implements it for you.
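To make the "no session map" point concrete, here is a dependency-free sketch of stateless request handling. It deliberately does not use the SDK: it only illustrates that each MCP JSON-RPC request can be answered from the request alone, which is what makes the approach safe across deploys and on serverless platforms. The <inline-code>list_todos<inline-code> tool, the server name, and the hard-coded protocol version are all assumptions for illustration (a real server would negotiate the version with the client). As far as we know, the SDK's equivalent is constructing <inline-code>StreamableHTTPServerTransport<inline-code> with <inline-code>sessionIdGenerator: undefined<inline-code>, but check the SDK docs for your version.

```typescript
// Dependency-free sketch of stateless MCP request handling (not the SDK).
// `list_todos`, `todo-mcp`, and the protocol version are illustrative assumptions.

type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: Record<string, unknown>;
};

type JsonRpcResponse =
  | { jsonrpc: "2.0"; id: number | string; result: unknown }
  | { jsonrpc: "2.0"; id: number | string; error: { code: number; message: string } };

// Static tool catalogue: nothing here depends on a previous request.
const mcpTools = [
  {
    name: "list_todos",
    description: "List the current user's todos",
    inputSchema: { type: "object", properties: {} },
  },
];

// No session map: any server instance (or serverless invocation)
// can answer any request, because nothing is remembered between calls.
function handleMcpRequest(req: JsonRpcRequest): JsonRpcResponse {
  switch (req.method) {
    case "initialize":
      return {
        jsonrpc: "2.0",
        id: req.id,
        result: {
          protocolVersion: "2025-06-18", // simplified: no version negotiation
          capabilities: { tools: {} },
          serverInfo: { name: "todo-mcp", version: "0.0.1" },
        },
      };
    case "tools/list":
      return { jsonrpc: "2.0", id: req.id, result: { tools: mcpTools } };
    case "tools/call": {
      const name = req.params?.name;
      if (name !== "list_todos") {
        // -32602 is the JSON-RPC "invalid params" code
        return {
          jsonrpc: "2.0",
          id: req.id,
          error: { code: -32602, message: `Unknown tool: ${String(name)}` },
        };
      }
      // A real tool would load todos from a database here.
      return {
        jsonrpc: "2.0",
        id: req.id,
        result: { content: [{ type: "text", text: "[]" }] },
      };
    }
    default:
      // -32601 is the JSON-RPC "method not found" code
      return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
  }
}
```

In a real app the SDK handles this dispatch, plus streaming and validation; the sketch is only meant to show why nothing needs to live in process memory.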

Here’s almost the entire guts of an MCP server built on Fastify using this approach:

We also found that testing using the MCP Inspector was a lot easier than testing in ChatGPT. ChatGPT’s error responses are not informative at all so it can be really hard to tell what went wrong when trying to get things going:

We recommend using the Inspector to validate conformance with MCP and OAuth 2.1 first, and then heading over to ChatGPT at the end once you’re confident the basics are working.

Implementing Auth

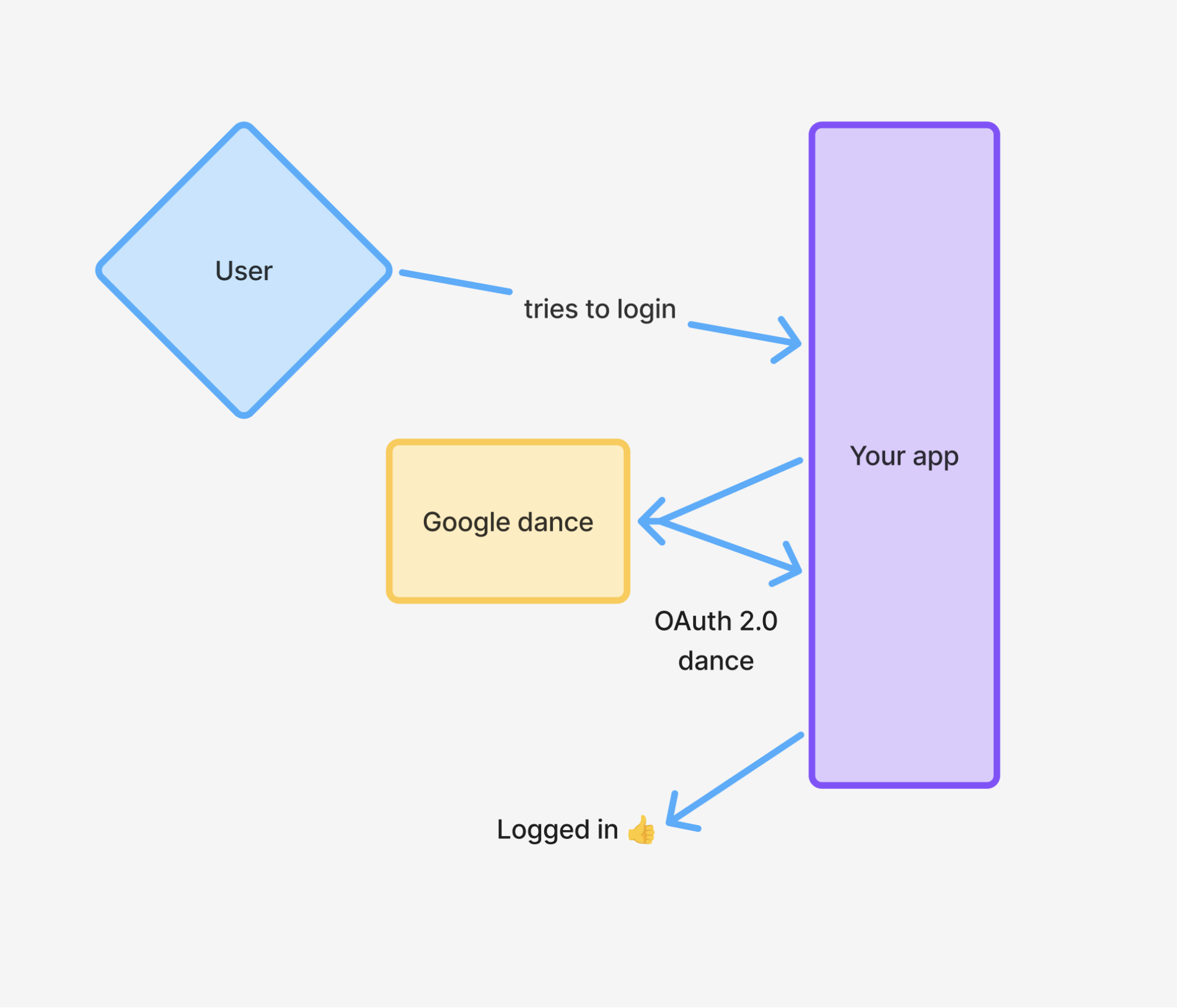

ChatGPT apps use OAuth 2.1, and expect your app to be an OAuth provider, not an OAuth client. Serving OAuth 2.1 has a whole lot more to it than authenticating with an OAuth 2.0 provider like you might be used to. Usually, if you’re implementing “Sign on with Google”, you can use an off-the-shelf OAuth 2.0 library and send logged-out users into Google to do the dance and come back with an access token. It looks something like this:

With ChatGPT apps, this is backwards! Instead of redirecting a user to a different provider, you must be the provider to OpenAI, facilitating the dance and sending back a token! You also must implement some special endpoints for the OIDC discovery protocol that allow ChatGPT to discover how to send a user in to get a token in the first place. None of this is rocket science, but it all adds up. If you’re using an off-the-shelf auth provider like Gadget, Auth0 or Clerk, you get a lot of this for free, which is nice, but you still need to know what’s happening to debug it when it breaks.
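As a rough idea of what those discovery endpoints return, here is a minimal sketch of the two OAuth metadata documents. The field names come from RFC 8414 (authorization server metadata) and RFC 9728 (protected resource metadata); the <inline-code>/oauth/*<inline-code> and <inline-code>/mcp<inline-code> paths are hypothetical and depend on how your app is routed. A full OIDC <inline-code>/.well-known/openid-configuration<inline-code> document carries additional fields that are not shown here.

```typescript
// Sketch of OAuth discovery documents. Field names follow RFC 8414 / RFC 9728;
// every path under `baseUrl` is a hypothetical example.

function authorizationServerMetadata(baseUrl: string) {
  return {
    issuer: baseUrl,
    authorization_endpoint: `${baseUrl}/oauth/authorize`,
    token_endpoint: `${baseUrl}/oauth/token`,
    registration_endpoint: `${baseUrl}/oauth/register`,
    response_types_supported: ["code"],
    grant_types_supported: ["authorization_code", "refresh_token"],
    // OAuth 2.1 requires PKCE; S256 is the standard challenge method
    code_challenge_methods_supported: ["S256"],
  };
}

function protectedResourceMetadata(baseUrl: string) {
  return {
    // The resource being protected (here, the MCP endpoint)
    resource: `${baseUrl}/mcp`,
    // Which authorization server(s) can issue tokens for it
    authorization_servers: [baseUrl],
  };
}

// Map a well-known path to the JSON body that should be served there.
// Returns undefined for paths that are not discovery documents.
function discoveryDocument(path: string, baseUrl: string): object | undefined {
  switch (path) {
    case "/.well-known/oauth-authorization-server":
      return authorizationServerMetadata(baseUrl);
    case "/.well-known/oauth-protected-resource":
      return protectedResourceMetadata(baseUrl);
    default:
      return undefined;
  }
}
```

A client that only knows your MCP URL can fetch the protected resource document, find the authorization server, and from there find the authorize and token endpoints.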

It looks something like this:

Building Widgets

The most exciting new capability of the ChatGPT Apps SDK is the ability to serve UI to users alongside your other MCP responses. You can feed text data to the LLM in ChatGPT, which has always been possible with MCPs, but now, you can feed interactive pixels to users! For example, I connected the Figma app to my ChatGPT, and asked it to generate a SaaS architecture diagram. Figma’s MCP did its fancy autogeneration thing under the hood, and replied with a UI widget that is interactive:

This makes so many interesting new experiences possible within ChatGPT, but boy oh boy are we early. These widgets are actually sandboxed iframes under the hood. Your MCP server tells ChatGPT to render a particular HTML document, and then that HTML can do all the stuff HTML can normally do – load up CSS and JS, render content, accept user interactions, make <inline-code>fetch<inline-code> requests, etc. ChatGPT pretty much enforces that these widgets are client side single page applications, because you can’t render dynamic HTML – it must be static as it is cached once when the ChatGPT app is installed, and can’t be dynamic per user.

So, instead of any trusty and true server side rendering, you must develop with React or similar, and endure all the “joys” that come along with that. ChatGPT accepts a very plain HTML document, with no support for TypeScript, Tailwind, asset bundling or anything like that, so in development, you must figure out how to bundle and serve your client-side app in a cross-origin safe way into this iframe. Getting this going is finicky, laborious, and hard to debug.
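To illustrate the "static shell" constraint, here is a minimal sketch of what the server hands to ChatGPT: one HTML document that is identical for every user and simply points at a bundled script and stylesheet. The asset file names and the <inline-code>ui://widget/todos.html<inline-code> URI are hypothetical, and the <inline-code>text/html+skybridge<inline-code> MIME type is our reading of OpenAI's examples, so verify it against the current Apps SDK docs.

```typescript
// Sketch of a static widget shell. Asset names and the resource URI are
// hypothetical; the MIME type is an assumption based on OpenAI's examples.

// Build the HTML document. Note there are no per-user values in here:
// the document is cached, so anything user-specific has to be loaded
// by the client-side app after it boots.
function widgetHtml(assetBase: string): string {
  return [
    `<div id="root"></div>`,
    `<link rel="stylesheet" href="${assetBase}/widget.css">`,
    `<script type="module" src="${assetBase}/widget.js"></script>`,
  ].join("\n");
}

// Describe the HTML as an MCP resource the server can return.
function widgetResource(assetBase: string) {
  return {
    uri: "ui://widget/todos.html",
    mimeType: "text/html+skybridge",
    text: widgetHtml(assetBase),
  };
}
```

In development, <inline-code>assetBase<inline-code> would point at your dev server; in production, at wherever your bundled assets are hosted.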

The good news is there’s lots of development tooling that helps! We’re big Vite-heads at Gadget, so we reached for Vite to power TypeScript compilation, bundling, hot-module-reloading (HMR), Tailwind support, etc etc to make widget development easier. We’ve put together a robust Vite plugin that makes it easy to mount a ChatGPT Widget server in any Vite-based app, Gadget-powered or otherwise. You can find it on GitHub.

Using Vite to power HMR also helps us get past one of the major annoyances we encountered building ChatGPT apps with a static HTML file: every time we update our HTML file, we would need to refresh our MCP connection to load up the latest changes. The dev and debugging loop was so slow.

Another helpful tidbit for TypeScript users: OpenAI has published some types for the <inline-code>window.openai<inline-code> object in their examples repo here.

Talking to your backend

Once you’ve got a widget rendering on the client side, you often want to read or write more data to your backend in response to user interactions. In a todo list app for example, we need to make a call when users add a new todo, or mark a todo as done.

There are two ways to do this:

- Using the <inline-code>window.openai<inline-code> object to make more calls to your MCP server.

- Using a cross-origin <inline-code>fetch<inline-code> or whatever normal API call machinery you would use to reach your backend.

The <inline-code>window.openai<inline-code> object is injected into the iframe and provided by OpenAI for making programmatic calls to your backend and the ChatGPT UI state. With it, you can make imperative calls to your MCP, like so:
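A minimal sketch of such a call, assuming <inline-code>window.openai<inline-code> exposes a <inline-code>callTool(name, args)<inline-code> method that returns a promise. The <inline-code>add_todo<inline-code> tool name and its <inline-code>title<inline-code> argument are hypothetical, and the interface below covers only the one method used here. Passing the bridge in as a parameter keeps the function easy to test outside ChatGPT.

```typescript
// Minimal shape of the part of `window.openai` used here (an assumption;
// the real object has more on it).
interface OpenAiBridge {
  callTool(name: string, args: Record<string, unknown>): Promise<unknown>;
}

// Call a (hypothetical) `add_todo` MCP tool from widget code.
// The request goes through ChatGPT to your MCP server, not directly
// to your backend.
async function addTodo(openai: OpenAiBridge, title: string): Promise<unknown> {
  return openai.callTool("add_todo", { title });
}
```

Inside a widget you would call something like <inline-code>addTodo(window.openai, "Buy milk")<inline-code> from a click handler; in a unit test you can pass a fake object with the same method.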

This is perhaps a bit strange because the MCP’s tools are usually designed for consumption by the LLM behind the scenes in ChatGPT, but here, we’re calling them from our own code as if they are our backend API. There’s nothing wrong with this, but it can be a bit awkward, as MCP tools are often designed to be easily consumed by the LLM with different versions of your app’s inputs and outputs that are most LLM friendly.

We also find that <inline-code>window.openai<inline-code> is kind of slow because it has to roundtrip through OpenAI’s backends to then make the call to your MCP server, rather than connecting directly to your app’s backend as you might normally do in a single page app.

However, using <inline-code>window.openai<inline-code> for tool calls has one major benefit, which is that you get authentication for free. ChatGPT manages the OAuth token for you, sending it back as an <inline-code>Authorization: Bearer <token><inline-code> header with each MCP request, so you are re-using the same auth mechanism you already had to set up for your MCP.
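On the server side, that means every MCP request can be authenticated the same way. A minimal sketch of pulling the token out of the header follows; the helper name is hypothetical, and verifying the token itself (signature, expiry, audience) is left to whatever OAuth implementation you use.

```typescript
// Extract the token from an `Authorization: Bearer <token>` header value.
// Returns null when the header is missing or uses a different scheme.
function parseBearerToken(header: string | undefined): string | null {
  if (!header) return null;
  // The scheme name is case-insensitive; the token must not contain spaces
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match?.[1] ?? null;
}
```

A request handler would call this first and answer with a 401 when it returns <inline-code>null<inline-code>, before doing any tool work.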

Using your own API

If you have an existing API, or if you want to avoid the complexities of the MCP in making API calls, you can still use <inline-code>fetch<inline-code> directly in your widget code. There are a couple of key things to be aware of, however:

- You’re now on your own for auth. As best we can tell, the OAuth token that ChatGPT fetches is not available client side in your Widget iframe, so you don’t have any way to know which user is viewing the widget currently. We think this is a missing function of the OpenAI SDK and opened a GitHub issue about it here.

- The LLM can no longer “see” tool calls or changes made by these API calls. When using <inline-code>window.openai<inline-code>, we’re pretty sure that interactions get added to the conversation behind the scenes such that ChatGPT “understands” what has happened the next time the user writes a prompt. The user can’t see these tool calls, but ChatGPT can, which gives it the most up to date understanding of what has happened in the conversation.

For these reasons, our base recommendation internally has become to use <inline-code>window.openai<inline-code>. It works, it has auth for free, and it shows the LLM what’s happened, so it seems like the right default.

Cross Origin Emotional Damage

CORS for MCP and widget development gets in the way a LOT. There’s no real way around it – OAuth 2.1 requests are made cross origin, and for Widgets, your code is running on a different origin, and so all the browser’s security enforcements for cross-origin code will apply. We had to fight a lot to get all the configs dialed in just right.

If you want to fast forward through this pain, Gadget has a forkable app template with the correct CORS config and widget development plugins set up here, but read on if you’d like to configure this yourself.

Generally speaking, there are three CORS configs to be aware of:

- The CORS headers for your MCP routes, served by your app’s backend to ChatGPT MCP requests and the Inspector

- The CORS headers for your OAuth 2.1 routes, served by your app’s backend or auth provider to ChatGPT auth requests and the Inspector

- The CORS headers for your frontend assets, served by your app’s backend or CDN to ChatGPT browsers making widget requests

For the MCP and OAuth 2.1 routes, since they are inherently cross-origin APIs, we recommend just setting a permissive <inline-code>Access-Control-Allow-Origin: *<inline-code> header. If built correctly, your MCP has auth built in, so there’s no danger in allowing cross-origin requests, because any cross-origin attackers will need to present a valid auth token before they can actually make MCP calls.
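A minimal sketch of the headers for these API routes, as a plain function you could call from any framework's response hook. The exact method and header lists are assumptions about what a typical MCP and OAuth setup needs, so trim or extend them for your app.

```typescript
// Permissive CORS headers for MCP and OAuth routes (a sketch; the method
// and header lists are assumptions, adjust them to what your routes accept).
function apiCorsHeaders(): Record<string, string> {
  return {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Methods": "GET, POST, DELETE, OPTIONS",
    // `Authorization` has to be listed by name: browsers do not treat a
    // wildcard in this header as covering it.
    "Access-Control-Allow-Headers":
      "Authorization, Content-Type, Mcp-Session-Id, Mcp-Protocol-Version",
    // Let browser clients read the session header off responses
    "Access-Control-Expose-Headers": "Mcp-Session-Id",
  };
}

// Preflight requests are OPTIONS requests that carry this request header.
function isCorsPreflight(method: string, headers: Record<string, string | undefined>): boolean {
  return (
    method.toUpperCase() === "OPTIONS" &&
    headers["access-control-request-method"] !== undefined
  );
}
```

Because the origin is a wildcard, this only works for token-based auth: browsers reject <inline-code>*<inline-code> when a response also sets <inline-code>Access-Control-Allow-Credentials: true<inline-code>, so cookie-based sessions need a different setup.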

For the Widget assets, you’ll need to consider both your development time CORS headers, as well as those for production. If using Vite or similar, you can configure it to set CORS headers for asset requests. OpenAI serves the widgets by default on "https://web-sandbox.oaiusercontent.com", so we recommend allowlisting that origin in your CORS config:
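A minimal sketch of that allowlist as a framework-agnostic function: it echoes the request's origin back only when it is on the list. The function name and the <inline-code>extraOrigins<inline-code> parameter are hypothetical. If you are on Vite, its <inline-code>server.cors<inline-code> option accepts an <inline-code>origin<inline-code> list that should do the same job in development.

```typescript
// Origins allowed to load widget assets. The sandbox origin is the one
// named above; anything else you add is up to you.
const WIDGET_ORIGINS = ["https://web-sandbox.oaiusercontent.com"];

// Compute CORS headers for an asset request (a sketch).
// `extraOrigins` is a hypothetical hook for things like a local dev origin.
function assetCorsHeaders(
  requestOrigin: string | undefined,
  extraOrigins: string[] = [],
): Record<string, string> {
  const allowed = [...WIDGET_ORIGINS, ...extraOrigins];
  // Always vary on Origin so caches don't serve one origin's
  // response to another.
  const headers: Record<string, string> = { Vary: "Origin" };
  if (requestOrigin !== undefined && allowed.includes(requestOrigin)) {
    headers["Access-Control-Allow-Origin"] = requestOrigin;
  }
  return headers;
}
```

Requests from any other origin get no <inline-code>Access-Control-Allow-Origin<inline-code> header at all, so the browser blocks them.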

Follow for more

We’re going to continue building more ChatGPT apps and sharing more as we go, so if you’d like to keep hearing, find us on X and at https://gadget.dev. And if you have any questions, feel free to reach out on our developer Discord.