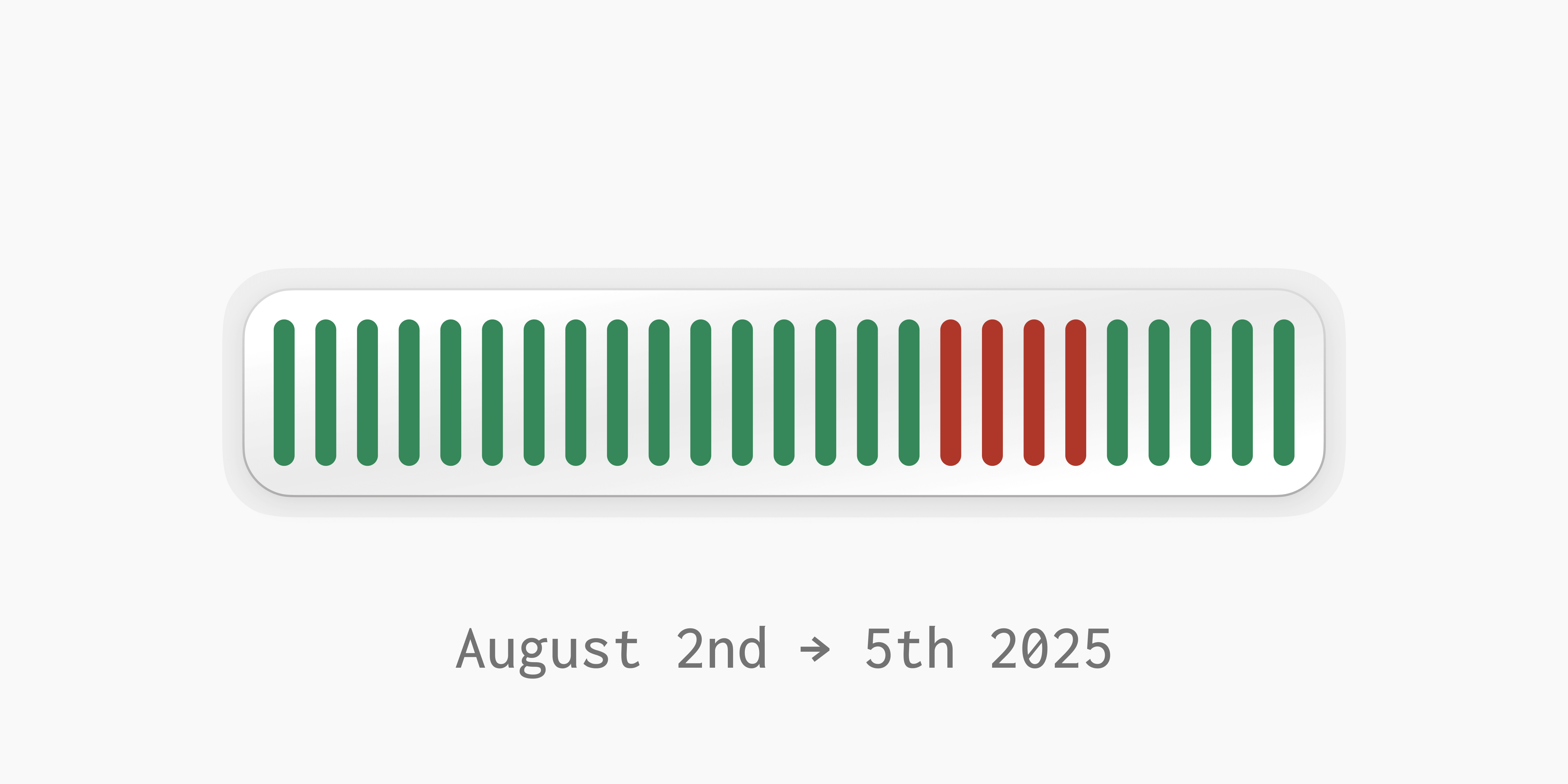

Incident report: August 2 - 5 Partial Outages

Between Saturday, August 2 and Tuesday, August 5, Gadget apps experienced three partial outages. We apologize deeply for this disruption and the issues it may have caused for you and your end users. This report details the causes of the outages and outlines how we will prevent them in the future.

We know your apps rely on Gadget to be up and functional, and during these incidents, we did not deliver. We’ve analyzed affected applications to discount any excessive request time caused by these outages. If you have any questions or concerns about these incidents or the impact on your bill, please reach out to us on Discord.

Summary

- Incident 1: PgBouncer pool pressure – Saturday, Aug 2 (~2:00 AM EDT – ~10:00 AM EDT): Some apps experienced a much higher rate of GGT_DATABASE_OPERATION_TIMEOUT or GGT_TRANSACTION_TIMEOUT errors. This was caused by unavailable connections within a subset of our PostgreSQL connection poolers, leading to elevated latency and intermittent errors on database operations. The connection pool pressure was caused by one broken workload holding many idle database transactions longer than expected, consuming an unfair share of pooled connections.

- Incident 2: Background migration & CPU saturation – Tuesday, Aug 5 (3:27 PM EDT – ~5:30 PM EDT, with follow-up effects into the evening): Apps got intermittent 502 Bad Gateway or GGT_DATABASE_OPERATION_TIMEOUT errors. This was caused by a Gadget platform background migration task that mistakenly triggered a large wave of TypeScript client rebuilds on one of the platform worker queues. At high volume, these client rebuild jobs were so CPU‑intensive that they saturated the total available CPU on some individual servers. This starved key services like our load balancers of CPU, and they failed to process requests.

- Incident 3: DB timeouts & Redis eviction – Tuesday, Aug 5 (10:44 PM EDT – ~12:00 AM EDT): Apps saw elevated latencies, delayed background work, and some GGT_DATABASE_OPERATION_TIMEOUT errors. This was caused by a GCP issue that produced massive packet loss on a select few nodes in the main Gadget cluster. The affected nodes remained online and operational, but with extremely limited network bandwidth, which caused a number of system-wide issues. Processes on the slow nodes could still read from key systems like our Redis cache and our Postgres databases. However, they read so slowly that server-side queues piled up within these systems. The issue was resolved once we rescheduled affected workloads off the slow nodes and the provider incident abated.

Incident Impact

- User‑facing: Intermittent 5xx responses and elevated latency during the windows above; some background actions delayed; a subset of production environments saw client/schema mismatches until clients were regenerated.

- Scope: Impact varied by zone and app; the worst windows were ~4:00 – 5:30 PM EDT Tuesday (CPU saturation) and 10:44 PM – ~12:00 AM EDT Tuesday (DB/cache/network coupling).

- Data durability: We see no evidence of data loss. Webhooks, background tasks, and syncs retried successfully as systems recovered.

We have issued service credits to all accounts affected. If you think there’s a billing impact beyond what has already been credited, please contact support to discuss.

Across these windows, some applications experienced intermittent 5xx errors, elevated latency, delayed background work, and, for apps touched by the migration, schema/client mismatches until their clients were regenerated. We have no evidence of data loss, and retries and reconciliations processed delayed work once services recovered.

Incident 1 — PgBouncer pool pressure (Sat, Aug 2)

Timeline

- ~2:00 AM EDT: PgBouncer active connections and query duration spike sharply in one availability zone for one shard of Gadget applications.

- ~10:00 AM EDT: Metrics return to normal before intervention; later analysis finds one app holding a large number of idle transactions, tying up connections. Other zones and shards remained healthy.

Analysis

An atypical workload in a single app held many open but idle transactions that timed out after issuing writes. Our PgBouncer connection pools are shared among all the apps on a shard, so those idle transactions consumed a large portion of the available connections for the impacted shard. This raised query latency and caused intermittent failed queries, even though the database had plenty of capacity. This unique workload evaded Gadget’s tenant fairness protections, including multiple rate limits, per-app transaction start throttling, and lazy connection allocation.
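A toy model makes this failure mode concrete. The sketch below assumes a single pool of 20 connections shared by every app on a shard. The `SharedPool` class, the app names, and the sizes are hypothetical, and real PgBouncer pooling has more moving parts. Once one app parks 18 connections in idle transactions, the third well-behaved app is turned away, even though the database itself has spare capacity.

```typescript
// Toy model (hypothetical names and sizes) of one connection pool shared by
// every app on a shard. This is an illustration, not PgBouncer's implementation.
class SharedPool {
  private readonly size: number;
  private readonly leases = new Map<string, number>(); // app id -> connections held

  constructor(size: number) {
    this.size = size;
  }

  inUse(): number {
    let total = 0;
    this.leases.forEach((held) => {
      total += held;
    });
    return total;
  }

  // Lease one connection to `app`; returns false once every connection is taken.
  acquire(app: string): boolean {
    if (this.inUse() >= this.size) return false;
    this.leases.set(app, this.heldBy(app) + 1);
    return true;
  }

  // Return one connection, e.g. when the app's transaction commits or rolls back.
  release(app: string): void {
    const held = this.heldBy(app);
    if (held > 0) this.leases.set(app, held - 1);
  }

  heldBy(app: string): number {
    return this.leases.get(app) ?? 0;
  }
}

// One app opens transactions and leaves them idle, so its connections are
// never handed back to the pool.
const pool = new SharedPool(20);
for (let i = 0; i < 18; i++) pool.acquire("noisy-app");

// Three well-behaved apps each need a single connection for a quick query.
const results = ["app-a", "app-b", "app-c"].map((app) => pool.acquire(app));
console.log(results); // [ true, true, false ]
```

Nothing in the pool itself distinguishes an idle transaction from a busy one. That is why the fix below meters idle time per app rather than relying on the pool's total size.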

The big issue here is that Gadget allowed one workload to disrupt others, which is a violation of a key design requirement of the platform: Individual applications must be able to access their guaranteed resources at all times. In this instance, we failed to guarantee that.

We’ve since shipped several new mitigations for this issue.

- We fixed the source of the issue by adding a new rate limiter that measures how much idle time individual applications spend in transactions and throttles them before they can consume more than their fair share of the connection pool. Both the pool’s total limit and the new rate limit are set high enough that only an erroneous workload like this one is affected; normal application operation is not. Since the incident, no applications have tripped the rate limit. Any that do will get 429 errors instead of tying up too many connections with idle transactions.

- We’ve added a number of paging alerts in this area so that we can detect and respond to partial failure modes of this nature immediately.
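One way to picture the idle-time rate limiter is as a per-app token bucket whose currency is milliseconds spent idle inside a transaction. This is a minimal sketch under stated assumptions, not Gadget’s implementation. The `IdleTransactionLimiter` class, the 10-second budget, and the refill rate are all hypothetical.

```typescript
// Hypothetical sketch of a per-app budget on idle-in-transaction time, using a
// token bucket measured in milliseconds. Capacity, refill rate, and the 429
// mapping are illustrative assumptions, not Gadget's actual values.
interface Bucket {
  budgetMs: number; // remaining idle-transaction allowance
  updatedAt: number; // last time the bucket was refilled (ms)
}

class IdleTransactionLimiter {
  private readonly buckets = new Map<string, Bucket>();
  private readonly capacityMs: number;
  private readonly refillMsPerMs: number;

  constructor(capacityMs: number, refillMsPerMs: number) {
    this.capacityMs = capacityMs;
    this.refillMsPerMs = refillMsPerMs;
  }

  // Fetch an app's bucket, topping it up for the time elapsed since last use.
  private bucket(app: string, now: number): Bucket {
    const b = this.buckets.get(app) ?? { budgetMs: this.capacityMs, updatedAt: now };
    b.budgetMs = Math.min(this.capacityMs, b.budgetMs + (now - b.updatedAt) * this.refillMsPerMs);
    b.updatedAt = now;
    this.buckets.set(app, b);
    return b;
  }

  // Charge the time a transaction sat idle while holding a pooled connection.
  recordIdle(app: string, idleMs: number, now: number): void {
    this.bucket(app, now).budgetMs -= idleMs;
  }

  // Gate new transactions: apps that have overspent their budget are rejected.
  admit(app: string, now: number): 200 | 429 {
    return this.bucket(app, now).budgetMs > 0 ? 200 : 429;
  }
}

// 10 s of idle-transaction budget per app, refilling at 0.1 ms per ms (100 ms/s).
const limiter = new IdleTransactionLimiter(10_000, 0.1);
limiter.recordIdle("noisy-app", 12_000, 0);
console.log(limiter.admit("noisy-app", 0)); // 429
console.log(limiter.admit("normal-app", 0)); // 200
console.log(limiter.admit("noisy-app", 30_000)); // 200, budget has partly refilled
```

Charging idle time, rather than counting transactions, targets the behavior from this incident. An app can run as many short transactions as its other limits allow. An app that parks connections in idle transactions exhausts its budget and starts receiving 429s.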

Incident 2 — Background migration & CPU saturation (Tue, Aug 5)

Timeline

- 3:27 PM EDT: A background migration task enqueued with low concurrency generates many TypeScript client rebuilds as a side effect, exhausting a platform worker queue.

- ~4:00 PM EDT: Workers performing the TypeScript client rebuilds exceed their CPU allowance by a wide margin; nodes hit 100% CPU; external health checks begin to fail. Foreground and background requests intermittently fail.

- Mitigation: We add Kubernetes CPU limits to the offending workers and stop the migration and queued work. We manually terminate and restart unhealthy services impacted by CPU saturation.

- ~5:30 PM EDT: Foreground traffic largely recovers; CPU returns to normal.

- ~6:00 PM EDT: We discover the migration changed production GraphQL schemas in a backwards‑incompatible way. We begin client regenerations for impacted apps.

- Aug 6 ~3:30 PM EDT: Client regeneration is complete; we continue to monitor.

Analysis

Gadget often runs background migrations to ship new products or fix bugs safely. In this instance, we were attempting to fix a bug in Gadget’s Shopify connection, where the type of a particular field had been captured incorrectly by our metadata system for the latest Shopify API version. The migration to fix this was enqueued to run slowly in the background, making a small change to each environment’s schema, which triggers a background TypeScript client rebuild on Gadget’s platform worker queues.

Those rebuilds transpile generated TypeScript code to JavaScript using the high-performance SWC compiler, which runs as a native extension in its own thread within our Node.js worker pods. To make TypeScript client rebuilds as fast as possible, we run these transforms with a high degree of parallelism, spawning many SWC transpiler threads at once for each rebuild. These threads result in a temporary spike in CPU demand on the worker pod doing a transform, but because the rebuilds are usually infrequent, we deemed this acceptable.

However, the migration changed many environments in rapid succession, violating our assumption that rebuilds are infrequent. Each rebuild job spawned the same large number of SWC transform threads, so our Node.js worker pods consumed far more CPU than in their steady state. The individual worker pods did not have hard CPU limits applied. They were therefore able to consume far more CPU than their CPU request, eventually using 100% of the CPU on the nodes they ran on. Other critical workloads for Gadget’s infrastructure were co-located on those same nodes. They were starved of CPU, causing widespread timeouts.

To address this, we’ve made several changes for each level of cause:

- We’ve limited the number of SWC transform threads available to each worker. This ensures that even if many rebuild jobs need SWC threads at the same time, they will be throttled instead of bursting further, limiting peak CPU demand regardless of what anomalous workloads appear in the future.

- We’ve added hard CPU limits to these Node.js worker pods, along with many other worker pods. CPU limits incur a slight overhead when enabled, but will restrict any workload from consuming all of the CPU resources on a machine. We apply these limits only to workloads where the overhead is negligible or there is substantial risk from the limits’ absence.

- We’re further isolating our critical path workloads, like our load balancers and connection poolers, to a dedicated set of servers that won’t run anything else. This will allow these services to remain up and healthy in the face of unexpected resource consumers in the future.

And finally, we’ve also updated our alerts and monitoring to better detect runaway resource consumers like this much faster.
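The effect of the SWC thread limit can be sketched with a counting semaphore that caps concurrent transforms per worker. This is an illustration under assumptions, not Gadget’s code. `fakeTranspile` is a hypothetical stand-in for an SWC transform (it is not the `@swc/core` API). The permit count of 4 and the job shape (10 rebuilds of 8 files each) are made up.

```typescript
// Hypothetical sketch: a per-worker semaphore that caps concurrent transpile
// tasks. `fakeTranspile` stands in for an SWC transform; it is not SWC's API.
class Semaphore {
  private available: number;
  private readonly waiters: Array<() => void> = [];

  constructor(permits: number) {
    this.available = permits;
  }

  async acquire(): Promise<void> {
    if (this.available > 0) {
      this.available--;
      return;
    }
    // No permit free: wait until release() hands one over directly.
    await new Promise<void>((resolve) => this.waiters.push(resolve));
  }

  release(): void {
    const next = this.waiters.shift();
    if (next) next(); // transfer the permit straight to the next waiter
    else this.available++;
  }
}

let running = 0;
let peak = 0;

async function fakeTranspile(file: string): Promise<string> {
  running++;
  peak = Math.max(peak, running);
  await new Promise((r) => {
    setTimeout(r, 5); // simulated CPU-bound work
  });
  running--;
  return file.replace(/\.ts$/, ".js");
}

const transformSlots = new Semaphore(4); // hypothetical per-worker thread budget

async function limitedTranspile(file: string): Promise<string> {
  await transformSlots.acquire();
  try {
    return await fakeTranspile(file);
  } finally {
    transformSlots.release();
  }
}

// Ten rebuild jobs arrive at once, each asking for 8 parallel transforms.
async function main(): Promise<number> {
  const jobs = Array.from({ length: 10 }, (_, j) =>
    Promise.all(Array.from({ length: 8 }, (_, f) => limitedTranspile(`job${j}/file${f}.ts`))),
  );
  const outputs = await Promise.all(jobs);
  const total = outputs.reduce((n, files) => n + files.length, 0);
  console.log(`transpiled ${total} files, peak concurrency ${peak}`); // transpiled 80 files, peak concurrency 4
  return total;
}

const done = main();
```

Without the semaphore, all 80 transforms in this sketch would start at once. With it, peak demand stays at the permit count no matter how many rebuild jobs arrive. Extra work queues up instead of bursting.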

Separately, the migration modified production schemas in a backwards‑incompatible way, so clients had to be regenerated to keep functioning. Modifying production schemas violated an internal rule requiring that users opt into any behavior change made to their application. If a user doesn’t opt in, then no change should happen, but in this case, it did. Because of the CPU saturation, client regeneration was also failing, so not all clients were regenerated quickly. This lengthened the window in which the incompatibility caused application-impacting errors. Applications affected by this change received an email with more details and a description of the impact on their app specifically.

Our internal tests and code review processes failed to detect that this migration would result in backwards-incompatible changes to production schemas. We’re revising both to apply much more scrutiny to all migrations we ship. We’re adding new snapshot fuzzer tests that ensure existing applications remain unchanged by each new migration shipped. We’re also requiring internal double-opt-ins before any code makes behavior changes to production environments, making more humans confirm the change is safe. Future migrations should never adjust production environment schemas.
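A snapshot fuzz test of the kind described above could take roughly the following shape. This minimal sketch assumes a drastically simplified model in which an environment is just a kind plus a map of field types. `assertProductionUnchanged`, the seeded generator, and both example migrations are hypothetical. The check generates many random existing environments, runs a migration over each, and fails if any production schema comes out different.

```typescript
// Hypothetical sketch of a snapshot fuzz check for migrations. The Environment
// shape and the migrations below are illustrative only.
type Schema = Record<string, string>; // field name -> field type
interface Environment {
  kind: "development" | "production";
  schema: Schema;
}
type Migration = (env: Environment) => Environment;

// Seeded linear congruential generator so any failure is reproducible.
function seededRandom(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296;
  };
}

const FIELD_TYPES = ["string", "number", "boolean"];

function randomEnvironment(rand: () => number): Environment {
  const schema: Schema = {};
  const fieldCount = 1 + Math.floor(rand() * 5);
  for (let i = 0; i < fieldCount; i++) {
    schema[`field${i}`] = FIELD_TYPES[Math.floor(rand() * FIELD_TYPES.length)];
  }
  return { kind: rand() < 0.5 ? "production" : "development", schema };
}

function assertProductionUnchanged(migration: Migration, runs = 500, seed = 42): void {
  const rand = seededRandom(seed);
  for (let i = 0; i < runs; i++) {
    const env = randomEnvironment(rand);
    const before = JSON.stringify(env.schema);
    // Hand the migration a copy so the snapshot itself cannot be mutated.
    const migrated = migration({ kind: env.kind, schema: { ...env.schema } });
    const after = JSON.stringify(migrated.schema);
    if (env.kind === "production" && before !== after) {
      throw new Error(`run ${i}: production schema changed from ${before} to ${after}`);
    }
  }
}

// Retyping a field everywhere is rejected; limiting it to development passes.
const retypeEverywhere: Migration = (env) => ({ ...env, schema: { ...env.schema, field0: "json" } });
const retypeDevelopmentOnly: Migration = (env) =>
  env.kind === "production" ? env : retypeEverywhere(env);

assertProductionUnchanged(retypeDevelopmentOnly); // passes
try {
  assertProductionUnchanged(retypeEverywhere);
} catch (err) {
  console.log((err as Error).message);
}
```

Seeding the generator means any failure names a run that can be replayed exactly.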

Incident 3 — DB timeouts & Redis eviction (Tue, Aug 5 late evening)

Timeline

- 10:44 PM EDT: A GCP networking degradation incident in us‑central1 begins around this time. Alerts for database connection timeouts fire, external checks fail, and errors appear across various internal and app UIs.

- 11:30 PM EDT: We identify how the networking degradation is causing connection issues on other healthy nodes and quarantine the slow nodes. Rescheduling the two affected connection pooler pods returns the cluster to health.

- 11:50 PM EDT – 12:00 AM EDT: Our Redis instance shows a near‑zero hit rate, causing elevated app API latencies due to constant cache misses.

- ~12:00 AM EDT: As provider networking stabilizes, Redis refills, connection poolers remain healthy, and database timeouts subside.

Analysis

A network packet loss event in one GCP availability zone led to cascading failures throughout the system due to impacts on other services outside that one zone. Gadget is designed to be resilient to zonal outages in our underlying cloud provider. Gadget runs elements of each service, balanced across 3 different availability zones, ensuring healthy replicas in every zone. Gadget’s automated health checks will identify unhealthy service components in any unhealthy zones and stop routing traffic to those services. However, even though this provider outage was zonal, it manifested as a performance issue rather than full node downtime. So, most replicas in the affected zone appeared healthy, as they continued to process small amounts of traffic and passed their health checks.

These slow nodes impacted several services that correctly recovered, but two services outside of the affected zone were impacted and did not automatically heal:

- Our Postgres connection poolers: Packet loss caused slow reads. This led to excessive connection pool pressure, because slow clients held onto their leases from the pool until they could finish their reads.

- Our Redis caches: Slow client reads caused a buildup of data allocated by Redis to each client’s output buffer. The most problematic Redis instance was running with the default configuration for maxmemory-clients, which allows 100% of the instance's memory to be consumed by output buffers. Redis serves a high number of clients, which by design consume a large amount of client buffer memory during normal operation. Enough clients were draining their buffers slowly that the Redis instance ran out of memory. As unconsumed buffers grew, the memory pressure caused a complete cache eviction. The parts of Gadget that rely on this cache then saw a downstream latency impact.
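Both failure modes reduce to simple rate arithmetic. In this back-of-the-envelope sketch, every number is made up rather than measured during the incident, and the function names are hypothetical. Little's law says the average number of leased connections equals the arrival rate times the average hold time. A Redis output buffer grows at the rate the server produces reply bytes, minus the rate a slow client drains them.

```typescript
// Back-of-the-envelope model with made-up numbers, not measurements from the incident.

// Little's law: average connections leased = arrival rate x average hold time.
function connectionsInUse(queriesPerSecond: number, holdMs: number): number {
  return (queriesPerSecond * holdMs) / 1000;
}

// A client's output buffer grows whenever Redis produces reply bytes faster
// than the client reads them off the socket.
function outputBufferBytes(produceBytesPerSec: number, drainBytesPerSec: number, seconds: number): number {
  return Math.max(0, (produceBytesPerSec - drainBytesPerSec) * seconds);
}

// Same query rate, but packet loss stretches each lease from 5 ms to 200 ms.
console.log(connectionsInUse(2_000, 5)); // 10
console.log(connectionsInUse(2_000, 200)); // 400

// One slow reader receiving 1 MB/s but draining only 100 KB/s, for one minute.
console.log(outputBufferBytes(1_000_000, 100_000, 60)); // 54000000
```

Take a pool of, say, 100 connections. The first case fits comfortably and the second does not, even though the query rate never changed. Slow clients alone are enough to exhaust the pool.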

Our manual remediation of rescheduling affected replicas, together with Google’s eventual correction of the packet loss issue, allowed the system to recover.

To mitigate this issue, we’ve made several changes.

- We’ve reconfigured all our Redis instances to have much lower limits for maxmemory-clients, which ensures that slow clients can't cause resource exhaustion in our caching layer.

- Gaps in our monitoring have been corrected, enabling alerts for high packet loss from any VM or zone so we can diagnose and mitigate cloud provider issues like this more quickly in the future.
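With maxmemory-clients at its default of 0, which disables the limit, client buffers compete with cached keys for the instance's memory. A cap changes what gives way: instead of evicting keys, Redis disconnects the clients using the most memory. The cap can be set as a percentage of maxmemory, for example `maxmemory-clients 5%`. The simplified model below illustrates that trade-off. The `clientsToEvict` function, the 8 GiB instance, the 5% cap, and all client sizes are hypothetical, and Redis's real eviction logic is more involved.

```typescript
// Simplified model of client eviction under a maxmemory-clients cap. Sizes are
// hypothetical, and Redis's real eviction logic is more involved than this.
interface ClientConn {
  id: string;
  bufferBytes: number; // memory held by this connection's buffers
}

// Pick clients to disconnect, heaviest first, until total client memory fits under the cap.
function clientsToEvict(clients: ClientConn[], limitBytes: number): string[] {
  let total = clients.reduce((sum, c) => sum + c.bufferBytes, 0);
  const evicted: string[] = [];
  for (const c of [...clients].sort((a, b) => b.bufferBytes - a.bufferBytes)) {
    if (total <= limitBytes) break;
    total -= c.bufferBytes;
    evicted.push(c.id);
  }
  return evicted;
}

const maxmemory = 8 * 1024 ** 3; // hypothetical 8 GiB instance
const limit = Math.floor(maxmemory * 0.05); // e.g. maxmemory-clients 5%

// 1,000 healthy clients with small buffers plus three slow readers.
const clients: ClientConn[] = [
  ...Array.from({ length: 1000 }, (_, i) => ({ id: `client-${i}`, bufferBytes: 65_536 })),
  { id: "slow-a", bufferBytes: 300_000_000 },
  { id: "slow-b", bufferBytes: 200_000_000 },
  { id: "slow-c", bufferBytes: 100_000_000 },
];

console.log(clientsToEvict(clients, limit)); // [ 'slow-a' ]
```

In this model, the heaviest slow reader is disconnected and the cached keys stay put. Without a cap, the same buffer growth would instead push the instance toward evicting cache data.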

Our commitment

At Gadget, we consider ourselves ultimately responsible for the uptime of the apps on the Gadget platform, so we must be robust to cloud provider issues like this and overcome them seamlessly, which didn’t happen in this case. It’s our responsibility to handle these failures for our customers regardless of their nature, and we have several big infrastructure projects in the pipeline to improve our posture here, like multi-region support.

Our uptime and reliability goals were not met during these incidents, and for this, we apologize. We’ve completed our root cause analysis and have already shipped the most important mitigations for these issues. We’ll continue to ship better guardrails around migrations, stronger isolation between critical and bursty workloads, and clearer alerting the moment something drifts out of bounds. We’ll publish follow‑ups as these changes land and will keep real‑time updates at https://status.gadget.dev.

If your app was impacted and you need help, or if you want to talk through any detail here, please reach out directly on Discord.

Harry Brundage

CTO, Gadget